Let AI penetrate into every PC - Interpretation of AMD Ryzen AI architecture and

Many industry insiders believe that AI may drive a fifth industrial revolution, following those driven by the steam engine, the internal combustion engine, electricity, and information technology. So how will AI enter our everyday lives? For most consumers, it will naturally start with the PCs and other digital devices closest to us. As the most widely used computing devices today, PCs have unique advantages for running AI applications. Leading PC chipmakers such as AMD recognized this trend early and are bringing powerful AI computing capabilities to every PC by integrating a dedicated NPU into the processor. So what are the specific features of the NPU in AMD's current Ryzen processors, and how do software and hardware work together in Ryzen AI?

Innovative NPU Design

Specifically Accelerates AI Computing

When AMD launched the Ryzen 7040 series mobile processors, it introduced a brand-new, independent NPU module. NPU stands for Neural Processing Unit, a processor designed specifically to accelerate the neural network calculations at the heart of AI computing. The Ryzen 7040 series integrates a first-generation NPU based on the XDNA architecture, and the recently released Ryzen AI 300 series brings a new, larger, and more powerful NPU based on the XDNA 2 architecture. So what is the internal design and working principle of the NPU, and how does it improve the processor's AI application performance?

Looking back, AMD's NPU has deep roots in Xilinx. In 2020, AMD announced its acquisition of Xilinx, a deal valued at about $49 billion when it closed in 2022. Since then, AMD has continued to develop Xilinx's original FPGA products and acceleration chips while integrating Xilinx's extensive AI acceleration intellectual property into its own technology, including the subject of this article: the NPU.

Neural Network Computing: A Significant Difference from Traditional Computing

Neural network computing differs significantly from traditional computing. Traditional computing, such as the logic computing of CPUs and the stream computing of GPUs, has a definite starting point, complex branching and calculation in the middle, and a definite result. Neural network computing, by contrast, is essentially about finding the relationship between input and output values and then using that relationship, generally called a model, to simulate the process. As a result, it involves large-scale parallel computation over massive amounts of data, and this parallelism differs from that of traditional GPU workloads.

Neural network computing differs from traditional computing in many ways, but the main differences can be summarized in three points. First, neural network computation is end-to-end, and because so much of it operates at enormous scale, it can be very difficult for humans to understand the reasoning behind, or the result of, each individual step. With traditional computation, engineers can optimize each step; neural network computation instead requires moving huge amounts of data at extremely high bandwidth, which puts the storage system of the traditional von Neumann architecture, where data must constantly travel between separate memory and compute units, at a disadvantage.
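To put the bandwidth point in numbers, the sketch below computes the arithmetic intensity (operations per byte moved) of a matrix multiplication, under the simplifying assumption that each input and output element crosses the memory bus exactly once. The function name and the example layer shapes are illustrative choices, not figures from AMD.

```python
def matmul_intensity(m: int, n: int, k: int, bytes_per_elem: int) -> float:
    """Operations per byte for C[m x n] = A[m x k] @ B[k x n].

    Assumes every element of A, B and C is transferred exactly once
    (ideal reuse); one multiply-accumulate counts as 2 operations.
    """
    ops = 2 * m * n * k
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return ops / bytes_moved

# One token through a 4096 x 4096 INT8 layer is a matrix-vector product:
# only about 2 operations per byte, so memory bandwidth is the bottleneck.
print(round(matmul_intensity(1, 4096, 4096, 1), 2))
# A batch of 512 tokens reuses each weight 512 times, so intensity rises sharply.
print(round(matmul_intensity(512, 4096, 4096, 1), 1))
```

Low-intensity workloads like the first case are exactly where moving data, rather than computing on it, dominates time and energy.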

Second, neural network computation gains relatively little parallelism from low-dimensional data such as scalars, vectors, and matrices; high-dimensional data such as tensors delivers much better parallel computing efficiency. Tensor computation therefore needs thorough optimization, for example in how sparse matrices are processed.
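One common way to optimize the processing of sparse matrices is to store only the non-zero entries, for example in compressed sparse row (CSR) form, so that multiplications by zero are never issued. The sketch below is a generic CSR matrix-vector product in plain Python; it illustrates the idea and is not a description of how XDNA itself handles sparsity.

```python
from typing import List, Tuple

def to_csr(dense: List[List[float]]) -> Tuple[List[float], List[int], List[int]]:
    """Convert a dense row-major matrix to CSR: (values, column indices, row pointers)."""
    values, cols, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                cols.append(j)
        row_ptr.append(len(values))  # end of this row's slice in values/cols
    return values, cols, row_ptr

def csr_matvec(values: List[float], cols: List[int], row_ptr: List[int],
               x: List[float]) -> List[float]:
    """Compute y = A @ x, touching only the stored non-zeros of A."""
    y = []
    for i in range(len(row_ptr) - 1):
        acc = 0.0
        for idx in range(row_ptr[i], row_ptr[i + 1]):
            acc += values[idx] * x[cols[idx]]
        y.append(acc)
    return y

A = [[0, 2, 0, 0],
     [1, 0, 0, 3],
     [0, 0, 0, 0]]
vals, cols, ptr = to_csr(A)
print(len(vals), csr_matvec(vals, cols, ptr, [1.0, 1.0, 1.0, 1.0]))
```

Only 3 of the 12 entries are stored and multiplied; the saving grows with the fraction of zeros.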

Third, neural network computation has some unique characteristics of its own. For example, the vast majority of computations do not require high precision: even low-precision integer arithmetic such as INT4 can produce reasonable results through additions and multiplications. Only the small portion of computations that significantly affect the final result requires higher-precision formats such as FP16 or BF16. Using the appropriate precision for each part of the computation to improve efficiency is therefore another problem neural network computing must address.
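The precision trade-off can be illustrated with symmetric per-tensor quantization: floating-point vectors are mapped to INT8 (or INT4) integers with a single scale factor, the dot product is computed with integer multiply-adds, and the result is rescaled once at the end. The scheme and function names below are a common textbook choice assumed for illustration, not AMD's quantizer.

```python
from typing import List, Tuple

def quantize(xs: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to signed `bits`-bit integers."""
    qmax = 2 ** (bits - 1) - 1             # 127 for INT8, 7 for INT4
    max_abs = max(abs(x) for x in xs) or 1.0
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(x / scale))) for x in xs]
    return q, scale

def int_dot(a: List[float], b: List[float], bits: int = 8) -> float:
    """Dot product computed on quantized integers, rescaled once at the end."""
    qa, sa = quantize(a, bits)
    qb, sb = quantize(b, bits)
    acc = sum(x * y for x, y in zip(qa, qb))   # pure integer multiply-accumulate
    return acc * sa * sb                        # single floating-point rescale

a = [0.12, -0.50, 0.33, 0.91, -0.07]
b = [0.40, 0.25, -0.80, 0.10, 0.66]
exact = sum(x * y for x, y in zip(a, b))
print(exact, int_dot(a, b, 8), int_dot(a, b, 4))
```

In this example the INT8 result stays very close to the floating-point one while INT4 drifts noticeably further, which is why higher-precision formats are reserved for the parts of a model that most affect the result.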

Given these three points, any optimization for neural network computation must focus on the storage system, tensor computation, precision control, and support for a wider range of data formats.

AMD NPU: Optimized Specifically for Neural Network Computation

An NPU is a DSA (Domain-Specific Architecture) module, that is, an architecture designed specifically for a particular domain. A DSA is optimized for one type of computation and is therefore extremely efficient at it, but it may be very inefficient at other types of computation or not support them at all. By contrast, familiar general-purpose architectures such as Zen 5 and Golden Cove can handle any task, but may not be especially efficient in specific domains. In today's computer microarchitectures, very few designs are purely general-purpose or purely domain-specific; the two approaches are combined as needed. SSE and AVX in CPU microarchitectures, for example, are essentially specialized instructions and execution units that strengthen a general-purpose CPU core.

To accelerate neural network computation, an NPU must largely match the three characteristics described earlier, and AMD's XDNA architecture addresses all three. Let's see how, starting with the macro architecture. XDNA is an NPU architecture based on spatial data flow, made up of a large array of AI computing units. Each AI computing unit contains a vector computation module, a scalar computation module, and a local data storage module. XDNA also contains storage blocks and computation blocks, all linked by a mesh-like interconnect and ultimately connected to the AIE-ML Array Interface, forming a complete NPU unit. Multiple NPU units combine to form the entire NPU.
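As a rough mental model of spatial data flow, the sketch below splits a matrix multiplication into blocks and assigns each output block to one cell of a 2D grid of compute units, each of which computes its block locally before writing it back. The grid dimensions, blocking rule and function name are hypothetical; on real XDNA hardware the compiler and runtime decide placement and data movement.

```python
from typing import List

Matrix = List[List[float]]

def matmul_on_grid(A: Matrix, B: Matrix, grid_rows: int, grid_cols: int) -> Matrix:
    """Compute A @ B by giving each cell (r, c) of a hypothetical grid one output block."""
    m, k, n = len(A), len(B), len(B[0])
    # Ceiling division so the blocks cover the whole output matrix.
    bm = -(-m // grid_rows)
    bn = -(-n // grid_cols)
    C = [[0.0] * n for _ in range(m)]
    for r in range(grid_rows):          # on hardware, all cells would run in parallel
        for c in range(grid_cols):
            rows = range(r * bm, min((r + 1) * bm, m))
            cols = range(c * bn, min((c + 1) * bn, n))
            # The cell computes its whole block into a local buffer...
            local = [[sum(A[i][p] * B[p][j] for p in range(k)) for j in cols]
                     for i in rows]
            # ...and only then writes the finished block to the shared output.
            for li, i in enumerate(rows):
                for lj, j in enumerate(cols):
                    C[i][j] = local[li][lj]
    return C

A = [[1, 2], [3, 4], [5, 6]]
B = [[1, 0, 2, 0, 1], [0, 1, 0, 2, 1]]
print(matmul_on_grid(A, B, grid_rows=2, grid_cols=2))
```

The design point is that each cell reads its inputs, accumulates privately, and communicates only finished results, which keeps most traffic local.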

Looking closer, each NPU unit in XDNA is built around enhanced vector units that support VLIW and SIMD execution and are optimized for machine learning and advanced signal processing applications. Beyond compute, each unit also includes a data memory module, known as DM, with 64KB of local memory and two DMA engines. This memory can hold input data, weights, activations, and various coefficients. In addition, a built-in RISC scalar processor supports different types of data communication.
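With only 64KB of local memory and two DMA engines per unit, software has to choose tile sizes that fit, often double-buffering the inputs so that a DMA fills one buffer while the vector unit works on the other. The sketch below does that sizing arithmetic for a square INT8 matrix tile with an INT32 accumulator; the buffer layout and the alignment parameter are assumptions for illustration, not AMD's documented memory map.

```python
def max_square_tile(mem_bytes: int = 64 * 1024, align: int = 8) -> int:
    """Largest t (a multiple of `align`) whose t x t matmul tile fits in local memory.

    Hypothetical layout:
      - A and B input tiles in INT8 (1 byte each), double-buffered -> 2 * 2 * t*t bytes
      - one C accumulator tile in INT32 (4 bytes)                   -> 4 * t*t bytes
    Returns 0 if not even one aligned tile fits.
    """
    def footprint(t: int) -> int:
        return 2 * 2 * t * t + 4 * t * t

    t = align
    while footprint(t + align) <= mem_bytes:
        t += align
    return t if footprint(t) <= mem_bytes else 0

print(max_square_tile(), max_square_tile(align=1))
```

Under these assumptions the whole working set costs 8 bytes per tile element, so a 64KB memory supports tiles of roughly 90 x 90.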

Does the XDNA architecture match the characteristics of AI computing described above? It does. First, XDNA integrates computation and storage: each AI computing unit contains both compute and storage components. This largely avoids shuttling data back and forth, as well as the constant large-scale data fetches over a shared bus that components such as GPUs rely on, which saves energy and improves both energy efficiency and computational efficiency.

Second, to handle frequent matrix and tensor computations, XDNA arranges numerous AI computing units into an array that is well suited to tensor and matrix computation. Third, XDNA supports CNN, RNN, and LSTM models and data formats including INT8, INT16, INT32, and BF16, and it supports fine-grained clock gating for better energy efficiency control, as well as multiple concurrent compute streams. These features align closely with the overall characteristics of AI computing.
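BF16, one of the formats listed above, keeps FP32's 8-bit exponent but only 7 explicit mantissa bits, so it covers the same numeric range at lower precision. A common conversion rounds the FP32 bit pattern to its upper 16 bits, as in the sketch below (round-to-nearest-even; NaN inputs are deliberately not special-cased for brevity).

```python
import struct

def float_to_bf16_bits(x: float) -> int:
    """Round an FP32 value to BF16 (nearest-even) and return the 16-bit pattern.

    NaN inputs are not special-cased in this sketch.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Add 0x7FFF plus the lowest kept bit so that exact ties round to even.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF

def bf16_bits_to_float(b: int) -> float:
    """Expand a BF16 bit pattern back to FP32 by appending 16 zero bits."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]

for v in (1.0, 3.14159265, 1e30):
    b = float_to_bf16_bits(v)
    print(hex(b), bf16_bits_to_float(b))
```

A value like 1e30 survives the conversion with its magnitude intact, something FP16 (maximum about 65504) cannot represent, while pi is rounded to 3.140625.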

Through the XDNA architecture and its NPU, AMD has therefore brought dedicated AI acceleration to Ryzen processors. For lightweight AI workloads, Ryzen processors no longer need to call on discrete or integrated graphics, or fall back on general-purpose but inefficient CPU computation. The NPU can handle these tasks directly and with very high energy efficiency, freeing up CPU and GPU resources and improving the overall efficiency of the processor.

Ryzen AI

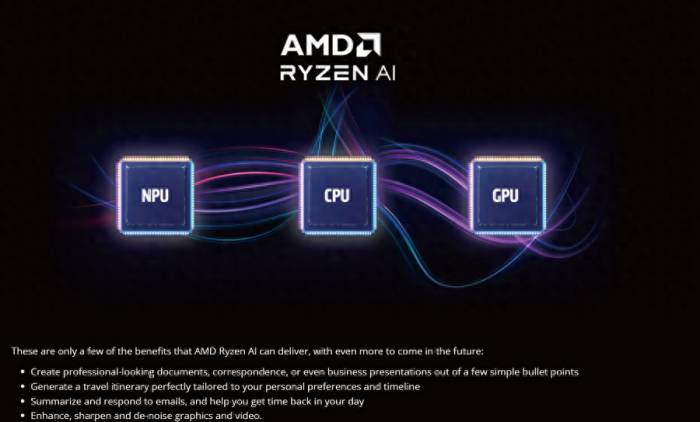

Efficient computing achieved by the trinity of CPU, GPU, and NPU.

Beyond these NPU hardware optimizations, AMD continues to leverage its CPU and GPU architectures across its platforms for AI computing, having them work in concert with the newly added NPU to form a highly efficient hardware platform that integrates CPU, GPU, and NPU.

AMD has described the role of each component in the Ryzen AI platform. The NPU's main goal is to improve AI computing efficiency. On the CPU side, Ryzen processors offer strong support for AVX-512 VNNI, which can significantly accelerate many AI workloads. On the GPU side, AMD can draw on the AI acceleration capabilities of Radeon GPUs to provide GPU-level AI acceleration for a wide range of software. By combining the strengths of the NPU, CPU, and GPU, the Ryzen AI platform can accelerate AI computing across many types of devices, applications, and scenarios.
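The core AVX-512 VNNI instruction for INT8 inference, VPDPBUSD, multiplies unsigned 8-bit values by signed 8-bit values, sums each group of four products, and adds the total to a 32-bit accumulator lane in a single instruction. The Python function below only emulates those semantics lane by lane (using the non-saturating, wrapping form) to make the data flow concrete; in real code this would be the `_mm512_dpbusd_epi32` intrinsic in C, and the function names here are illustrative.

```python
from typing import List

def _wrap_i32(v: int) -> int:
    """Wrap an integer to signed 32-bit two's complement."""
    v &= 0xFFFFFFFF
    return v - (1 << 32) if v >= (1 << 31) else v

def vpdpbusd(acc: List[int], a_u8: List[int], b_s8: List[int]) -> List[int]:
    """Emulate VPDPBUSD: per 32-bit lane, acc += sum of four (u8 * s8) products."""
    assert len(a_u8) == len(b_s8) == 4 * len(acc)
    out = []
    for lane, base in enumerate(acc):
        total = base
        for i in range(4 * lane, 4 * lane + 4):
            assert 0 <= a_u8[i] <= 255 and -128 <= b_s8[i] <= 127
            total += a_u8[i] * b_s8[i]
        out.append(_wrap_i32(total))  # the non-saturating form wraps on overflow
    return out

# Two accumulator lanes consume eight byte pairs.
print(vpdpbusd([10, 0], [1, 2, 3, 4, 255, 255, 0, 0], [1, 1, 1, 1, -128, 127, 5, 5]))
```

A 512-bit register holds 16 such lanes, so one instruction performs 64 INT8 multiply-accumulates.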

Building on this combination of CPU, GPU, and NPU, AMD has highlighted several key attributes of the Ryzen AI platform: performance, security, energy efficiency, and cost-effectiveness. With the hardware platform now AI-ready, AMD's focus for Ryzen AI has shifted to expanding ecosystem support across the industry chain and preparing AI at the software and application levels.

To drive application and software support for Ryzen AI, AMD has made significant progress on several fronts, including its software platform and ecosystem. First, AMD has built its Ryzen AI software on the open-source ONNX Runtime and supports three major frameworks: ONNX, TensorFlow, and PyTorch. According to AMD's architecture diagram, when an application launches an AI workload, it reaches the relevant Ryzen AI hardware through a quantization step (Quantize) and ONNX Runtime. In this way, AMD achieves coordinated operation of software and hardware.
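An application typically reaches the NPU through ONNX Runtime execution providers, listed in priority order. The sketch below orders whatever providers are installed so that the NPU is tried first, then the GPU, then the CPU. `VitisAIExecutionProvider` is the provider name used in AMD's Ryzen AI documentation and `DmlExecutionProvider` is ONNX Runtime's DirectML provider; which ones exist depends on the installed packages, and the model path, input name and any provider options (such as a Vitis AI config file) shown in the comments are hypothetical placeholders.

```python
from typing import List

# Provider names as ONNX Runtime reports them; availability depends on the install.
NPU_EP = "VitisAIExecutionProvider"  # Ryzen AI NPU via Vitis AI
GPU_EP = "DmlExecutionProvider"      # DirectML GPU provider on Windows
CPU_EP = "CPUExecutionProvider"      # general-purpose fallback

def pick_providers(available: List[str]) -> List[str]:
    """Order providers NPU -> GPU -> CPU, keeping only the accelerators that are installed."""
    chosen = [p for p in (NPU_EP, GPU_EP) if p in available]
    chosen.append(CPU_EP)  # CPU last, so operators the accelerators skip still run
    return chosen

# Typical use (needs the onnxruntime package and a real model; names are placeholders):
#   import onnxruntime as ort
#   session = ort.InferenceSession(
#       "model.onnx", providers=pick_providers(ort.get_available_providers()))
#   outputs = session.run(None, {"input": input_array})
print(pick_providers(["CPUExecutionProvider", "VitisAIExecutionProvider"]))
```

Keeping the CPU provider at the end of the list mirrors the division of labor described above: the NPU takes what it supports, and the general-purpose cores cover the rest.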

AMD is also promoting Ryzen AI among developers and has secured broad industry support for Ryzen products: many companies and software vendors, including Adobe, Microsoft, Avid, OBS Studio, Zoom, Topaz Labs, and Audacity, have optimized their software for the Ryzen AI platform. More importantly, AMD says that by the end of 2024, more than 150 ISV partners will launch applications powered by Ryzen AI, including popular software such as Photoshop and Premiere Pro, many of whose modules use Ryzen AI technologies.

AMD AI PCs Empower Diverse Industries

With hardware and software integration in place, AMD has partnered with OEMs and ecosystem partners to energize the AI PC ecosystem. According to AMD, since launching Ryzen AI PCs in 2023, the company has been steadily building out an AI ecosystem. Millions of related products and systems have now shipped, offering a wide variety of PC designs. AMD now offers a complete AI solution from the cloud to the edge, supporting the deployment of AI capabilities at every level to drive innovation.

As early as March 2024, AMD showcased the momentum of its AI PCs at a large-scale AI PC Innovation Summit. There, numerous OEM partners and more than 100 ecosystem partners presented a wealth of AI application solutions, covering both industry-specific uses, such as AI-assisted cultural heritage restoration, industrial design, medical imaging, programming, healthcare, education, and consulting, and common consumer uses such as content creation, office collaboration, and gaming and entertainment.

Thanks to AMD's continued ecosystem development, AMD Ryzen AI PCs now serve a wide range of industries. AI application solutions for many sectors have already been deployed or are on the way, and they can run on the AMD Ryzen AI PC platform, letting ordinary consumers experience the appeal of AI computing.

For instance, Adobe Lightroom and Photoshop both use Adobe Sensei AI and ML technology to enhance image editing, with AI features such as lens blur, super resolution, noise reduction, and sky replacement. Lightroom's lens blur tool adds depth-of-field effects to ordinary photos: Sensei generates a depth map, which is then used to blur the background or foreground. The tool now supports AMD Ryzen AI technology, so ordinary consumers can use such AI features on AMD Ryzen AI PCs.
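Adobe does not publish how its lens blur works internally, but the general idea of depth-based blur can be sketched: pixels whose estimated depth is far from a chosen focal depth are blurred more strongly. The toy version below works on a small grayscale image stored as nested lists and uses a box blur whose radius grows linearly with depth distance; the function name, the linear radius rule and the `max_radius` parameter are all assumptions for illustration.

```python
from typing import List

Image = List[List[float]]

def depth_blur(img: Image, depth: Image, focus: float, max_radius: int = 2) -> Image:
    """Box-blur each pixel with a radius proportional to |depth - focus|.

    `depth` is assumed to be a depth map normalized to [0, 1], same size as `img`.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # In-focus pixels get radius 0 and keep their original value.
            r = round(min(1.0, abs(depth[y][x] - focus)) * max_radius)
            total, count = 0.0, 0
            for yy in range(max(0, y - r), min(h, y + r + 1)):
                for xx in range(max(0, x - r), min(w, x + r + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out

img = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
far = [[1.0] * 3 for _ in range(3)]
print(depth_blur(img, far, focus=0.0, max_radius=1))
```

Producing a good depth map from a single photo is the hard, AI-driven part; once it exists, applying the blur is comparatively simple.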

In summary, judging by the current state of the industry, AMD is at the forefront of the AI PC market. The NPU, the Ryzen AI platform, and ecosystem development all contribute to AMD's effort to deliver better technologies and products for the AI era, bringing AI closer to and within reach of ordinary users.
